Twitter’s newest test could provide some long-awaited relief for anyone facing harassment on the platform.

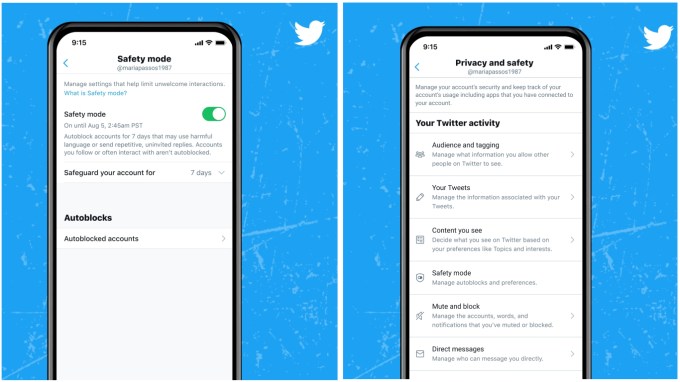

The new product test introduces a feature called “Safety Mode” that puts up a temporary line of defense between an account and the waves of toxic invective that Twitter is notorious for. The mode can be enabled from the settings menu; doing so turns on an algorithmic screening process that filters out potential abuse for seven days.

“Our goal is to better protect the individual on the receiving end of Tweets by reducing the prevalence and visibility of harmful remarks,” Twitter Product Lead Jarrod Doherty said.

Safety Mode won’t be rolling out broadly, at least not yet. The new feature will first be available to what Twitter describes as a “small feedback group” of about 1,000 English language users.

In deciding what to screen out, Twitter’s algorithmic approach assesses a tweet’s content, such as hateful language or repetitive, unreciprocated mentions, as well as the relationship between an account and the accounts replying. The company notes that accounts you follow or regularly exchange tweets with won’t be subject to the blocking features in Safety Mode.

For anyone in the test group, Safety Mode can be toggled on in the privacy and safety options. Once enabled, an account will stay in the mode for the next seven days. After the seven-day period expires, it can be activated again.
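
Twitter has not published how the screening works internally, so the following is only a rough sketch of the behavior described above: a seven-day window, an exemption for accounts you follow or regularly talk to, and a content check. Every name here (`Account`, `should_screen`, `looks_abusive`) is hypothetical, and the `looks_abusive` flag merely stands in for whatever classifier Twitter actually uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional, Set

# Seven-day window, as described in the article.
SAFETY_MODE_DURATION = timedelta(days=7)


@dataclass
class Account:
    """Hypothetical model of an account; not Twitter's data model."""
    handle: str
    following: Set[str] = field(default_factory=set)
    frequent_contacts: Set[str] = field(default_factory=set)
    safety_mode_enabled_at: Optional[datetime] = None

    def enable_safety_mode(self, now: datetime) -> None:
        # Re-enabling after expiry simply starts a fresh window.
        self.safety_mode_enabled_at = now

    def safety_mode_active(self, now: datetime) -> bool:
        if self.safety_mode_enabled_at is None:
            return False
        return now - self.safety_mode_enabled_at < SAFETY_MODE_DURATION


def should_screen(recipient: Account, author: str,
                  looks_abusive: bool, now: datetime) -> bool:
    """Decide whether a reply from `author` would be filtered.

    `looks_abusive` is an assumed placeholder for the content signal
    (hateful language, repetitive unreciprocated mentions).
    """
    if not recipient.safety_mode_active(now):
        return False
    # Accounts the recipient follows or regularly interacts with are exempt.
    if author in recipient.following or author in recipient.frequent_contacts:
        return False
    return looks_abusive
```

In this sketch, a flagged reply from a stranger is screened while the mode is active, the same reply from a followed account is not, and nothing is screened once the seven days have passed.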

In crafting the new feature, Twitter says it spoke with experts in mental health, online safety and human rights. The partners Twitter consulted with were able to contribute to the initial test group by nominating accounts that might benefit from the feature, and the company hopes to focus on female journalists and marginalized communities in its test of the new product. Twitter says that it will start reaching out to accounts that meet the criteria of the test group — namely accounts that often find themselves on the receiving end of some of the platform’s worst impulses.

Earlier this year, Twitter announced that it was working on developing new anti-abuse features, including an option to let users “unmention” themselves from tagged threads and a way for users to prevent serial harassers from mentioning them moving forward. The company also hinted at a feature like Safety Mode that could give users a way to defuse situations during periods of escalating abuse.

Being “harassed off of Twitter” is, unfortunately, not that uncommon. When hate and abuse get bad enough, people tend to abandon Twitter altogether, taking extended breaks or leaving outright. That’s not great for the company either, and while it’s been slow to offer real solutions to harassment, it’s clearly aware of the problem and working toward some possible solutions.
