Enables full control of data at ingest and evaluates data streams five to ten times faster than legacy technologies, helping boost security, ease management, and maximize the value of data

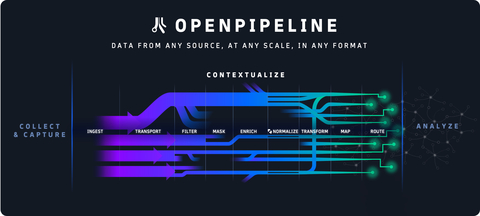

Dynatrace (NYSE: DT), the leader in unified observability and security, today announced the launch of OpenPipeline®, a new core technology that provides customers with a single pipeline to manage petabyte-scale data ingestion into the Dynatrace® platform to fuel secure and cost-effective analytics, AI, and automation.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20240131561989/en/


Dynatrace OpenPipeline empowers business, development, security, and operations teams with full visibility into and control of the data they are ingesting into the Dynatrace platform while preserving the context of the data and the cloud ecosystems where they originate. Additionally, it evaluates data streams five to ten times faster than legacy technologies. As a result, organizations can better manage the ever-increasing volume and variety of data emanating from their hybrid and multicloud environments and empower more teams to access the Dynatrace platform’s AI-powered answers and automations without requiring additional tools.

“Data is the lifeblood of our business. It contains valuable insights and enables automation that frees our teams from low-value tasks,” said Alex Hibbitt, Engineering Director, SRE and Fulfillment, albelli-Photobox Group. “However, we face challenges in managing our data pipelines securely and cost effectively. Adding OpenPipeline to Dynatrace extends the value of the platform. It enables us to manage data from a broad spectrum of sources alongside real-time data collected natively in Dynatrace, all in one single platform, allowing us to make better-informed decisions.”

According to Gartner®, “Modern workloads generate increasing volumes—hundreds of terabytes and even petabytes each day—of telemetry originating from a variety of sources. This threatens to overwhelm the operators responsible for availability, performance, and security. The cost and complexity associated with managing this data can be more than $10 million per year in large enterprises.1”

Creating a unified pipeline to manage this data is challenging due to the complexity of modern cloud architectures. This complexity and the proliferation of monitoring and analytics tools within organizations can strain budgets. At the same time, organizations need to comply with security and privacy standards, such as GDPR and HIPAA, in relation to their data pipelines, analytics, and automations. Despite these challenges, stakeholders across organizations are seeking more data-driven insights and automation to make better decisions, improve productivity, and reduce costs. Therefore, they require clear visibility and control over their data while managing costs and maximizing the value of their existing data analytics and automation solutions.

Dynatrace OpenPipeline works with other core Dynatrace platform technologies, including the Grail™ data lakehouse, Smartscape® topology, and Davis® hypermodal AI, to address these challenges by delivering the following benefits:

- Petabyte-scale data analytics: Leverages patent-pending stream-processing algorithms to achieve significantly higher data throughput at petabyte scale.

- Unified data ingest: Enables teams to ingest and route observability, security, and business events data, including dedicated Quality of Service (QoS) for business events, from any source and in any format, such as Dynatrace® OneAgent, Dynatrace APIs, and OpenTelemetry, with customizable retention times for individual use cases.

- Real-time data analytics on ingest: Allows teams to convert unstructured data into structured and usable formats at the point of ingest—for example, transforming raw data into time series or metrics data and creating business events from log lines.

- Full data context: Enriches and retains the context of heterogeneous data points—including metrics, traces, logs, user behavior, business events, vulnerabilities, threats, lifecycle events, and many others—reflecting the diverse parts of the cloud ecosystem where they originated.

- Controls for data privacy and security: Gives users control over which data they analyze, store, or exclude from analytics and includes fully customizable security and privacy controls, such as automatic and role-based PII masking, to help meet customers’ specific needs and regulatory requirements.

- Cost-effective data management: Helps teams avoid ingesting duplicate data and reduces storage needs by transforming data into usable formats—for example, from XML to JSON—and enabling teams to remove unnecessary fields without losing any insights, context, or analytics flexibility.

“OpenPipeline is a powerful addition to the Dynatrace platform,” said Bernd Greifeneder, CTO at Dynatrace. “It enriches, converges, and contextualizes heterogeneous observability, security, and business data, providing unified analytics for these data and the services they represent. As with the Grail data lakehouse, we architected OpenPipeline for petabyte-scale analytics. It works with Dynatrace’s Davis hypermodal AI to extract meaningful insights from data, fueling robust analytics and trustworthy automation. Based on our internal testing, we believe OpenPipeline powered by Davis AI will allow our customers to evaluate data streams five to ten times faster than legacy technologies. We also believe that converging and contextualizing data within Dynatrace makes regulatory compliance and audits easier while empowering more teams within organizations to gain immediate visibility into the performance and security of their digital services.”

Dynatrace OpenPipeline is expected to be generally available for all Dynatrace SaaS customers within 90 days of this announcement, starting with support for logs, metrics, and business events. Support for additional data types will follow. Visit the Dynatrace OpenPipeline blog for more information.

About Dynatrace

Dynatrace (NYSE: DT) exists to make the world’s software work perfectly. Our unified platform combines broad and deep observability and continuous runtime application security with Davis® hypermodal AI to provide answers and intelligent automation from data at an enormous scale. This enables innovators to modernize and automate cloud operations, deliver software faster and more securely, and ensure flawless digital experiences. That’s why the world’s largest organizations trust the Dynatrace® platform to accelerate digital transformation.

Curious to see how you can simplify your cloud and maximize the impact of your digital teams? Let us show you. Sign up for a 15-day Dynatrace trial.

Cautionary Language Concerning Forward-Looking Statements

This press release includes certain “forward-looking statements” within the meaning of the Private Securities Litigation Reform Act of 1995, including statements regarding the capabilities of OpenPipeline, the expected benefits to organizations from using OpenPipeline, and the timing for when OpenPipeline is expected to be generally available. These forward-looking statements include all statements that are not historical facts and statements identified by words such as “will,” “expects,” “anticipates,” “intends,” “plans,” “believes,” “seeks,” “estimates,” and words of similar meaning. These forward-looking statements reflect our current views about our plans, intentions, expectations, strategies, and prospects, which are based on the information currently available to us and on assumptions we have made. Although we believe that our plans, intentions, expectations, strategies, and prospects as reflected in or suggested by those forward-looking statements are reasonable, we can give no assurance that the plans, intentions, expectations, or strategies will be attained or achieved. Actual results may differ materially from those described in the forward-looking statements and will be affected by a variety of risks and factors that are beyond our control, including the risks set forth under the caption “Risk Factors” in our Quarterly Report on Form 10-Q filed on November 2, 2023, and our other SEC filings. We assume no obligation to update any forward-looking statements contained in this document as a result of new information, future events, or otherwise.


1 Gartner, Innovation Insight: Telemetry Pipelines Elevate the Handling of Operational Data, Gregg Siegfried, 20 July 2023. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.


Contacts

Investor Contact:

Noelle Faris

VP, Investor Relations

Noelle.Faris@dynatrace.com

Media Relations:

Jerome Stewart

VP, Communications

Jerome.Stewart@dynatrace.com