Artificial intelligence (AI) has become the defining technology race of this decade, and Nvidia (NVDA) sits squarely at its center. Its GPUs are the critical engines powering everything from large language models (LLMs) to data center-scale AI training. But as the tech industry pours hundreds of billions of dollars into AI infrastructure, a surprisingly technical yet enormously consequential question has ignited one of the most intense debates on Wall Street: How fast does an AI chip actually depreciate, and why does it matter?

Michael Burry, the famed “Big Short” investor, has thrust this accounting issue into the spotlight, arguing that hyperscalers may be assuming unrealistically long useful lives for Nvidia’s GPUs. If he’s right, it could mean that major technology companies are understating expenses, overstating earnings, and setting themselves up for sizable write-downs in a few years. Others have joined the debate, scrutinizing everything from resale values to replacement cycles to how quickly new Nvidia AI chips make previous generations obsolete.

So, how quickly do AI chips depreciate? What does the available data show? And more importantly, why does this matter so much for Nvidia stock?

Let’s break it all down.

About Nvidia Stock

Nvidia is a premier technology firm known for its expertise in graphics processing units and artificial intelligence solutions. The company is renowned for its pioneering contributions to gaming, data centers, and AI-driven applications. NVDA’s technological solutions are developed around a platform strategy that combines hardware, systems, software, algorithms, and services to provide distinctive value. The chipmaker has a market cap of $4.49 trillion, making it the world’s most valuable company.

Shares of the AI darling have gained 32% on a year-to-date (YTD) basis. NVDA stock continues to consolidate just above the key $180 support level. Earlier this week, President Trump said he would allow the company to export its H200 chip to approved customers in China and other countries in exchange for a 25% surcharge, but the news didn’t lift the stock.

How Quickly Do AI Chips Really Depreciate?

A debate has emerged among investors over the accounting assumptions major tech companies are using for the Nvidia AI chips that power their data centers. It may seem that something as banal as the right depreciation schedule for fixed assets wouldn’t warrant much attention, but when a handful of the world’s largest companies are pouring hundreds of billions into AI infrastructure, it actually does. And when famed “Big Short” investor Michael Burry is the one driving the debate, it becomes impossible to ignore.

In accounting, depreciation refers to spreading the cost of a tangible asset over its expected useful life. Essentially, it allows companies to avoid recording the full cost of an asset as an expense in the year it’s purchased and instead distribute that cost over the asset’s useful life. A longer depreciation period reduces the expense recognized each year, which in turn boosts reported earnings.
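To make the mechanics concrete, here is a minimal sketch of straight-line depreciation in Python. The $12 billion purchase price and the zero salvage value are hypothetical assumptions chosen for round numbers, not figures from any company's filings.

```python
def straight_line_annual_expense(cost, useful_life_years, salvage_value=0.0):
    """Annual depreciation expense under the straight-line method."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return (cost - salvage_value) / useful_life_years


# Hypothetical figure for illustration only: $12 billion of GPU servers.
cost = 12_000_000_000

for life in (3, 4, 6):
    expense = straight_line_annual_expense(cost, life)
    print(f"{life}-year useful life: ${expense / 1e9:.1f}B expense per year")
```

Under these assumptions, stretching the useful life from three years to six cuts the annual expense in half, and that gap flows directly into reported earnings.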

And this is where things get particularly interesting. Burry argued in a post on X last month that hyperscalers are understating depreciation costs by assuming chip life cycles that are longer than what he believes is realistic. “Understating depreciation by extending useful life of assets artificially boosts earnings - one of the more common frauds of the modern era. Massively ramping capex through purchase of Nvidia chips/servers on a 2-3 yr product cycle should not result in the extension of useful lives of compute equipment. Yet this is exactly what all the hyperscalers have done,” Burry wrote. Burry estimated that from 2026 to 2028, this accounting method could understate depreciation by about $176 billion, effectively inflating reported earnings across the industry.

In fact, hyperscalers are currently using depreciation schedules of roughly six years for GPUs. Meta Platforms raised the estimated useful lives of most of its servers and network equipment to 5.5 years this year, which lowered its depreciation expense by $2.3 billion over the first nine months of 2025. Alphabet and Microsoft assign six-year useful lives to similar assets, while Amazon had moved up to six years by 2024 but reduced the estimate to five years for some servers and networking gear this year.

It’s worth noting that setting a depreciation schedule for AI GPUs is challenging because they’re still relatively new to the market, and much of what appears in companies’ financial statements relies on estimates, assumptions, and informed guesswork. So, if the actual useful life of these GPUs ends up being shorter than what companies are assuming, the AI industry could face a painful earnings hit in a few years. But I don’t believe that scenario is likely.

Methods Matter

The logical explanation may actually lie in the depreciation method being used. While straight-line depreciation is the prevailing method, I believe AI GPUs should instead be depreciated using an accelerated method. Let me break this down.

Accelerated depreciation is an accounting technique that records a greater portion of an asset’s cost as an expense in the earlier years of its useful life and less in the later years. This method suits AI GPUs well, especially now that Nvidia has shifted to an annual product cycle. If a company buys Nvidia’s newest AI chip, the depreciation expense would be higher in the early years, when the economic benefit is realized more quickly, and lower in the later years.

And there’s already some data that supports this thesis. According to Silicon Data, which tracks Nvidia chip pricing, an H100 system in its third year of use was recently resold for about 45% of the price of a new H100. Also, Nvidia CEO Jensen Huang said in March that once the next-generation Blackwell chips begin shipping, “you couldn’t give Hoppers away.” While he was joking, it still underscores a key point: once Nvidia launches a new chip, the value of its predecessor declines due to relative obsolescence, on top of normal wear and tear if the older chip was purchased some time before the latest model was released. That offers even stronger justification for using an accelerated depreciation method.
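The difference between the two methods is easier to see side by side. The sketch below uses double-declining balance, which is one common accelerated method and my choice for illustration only; the argument above does not depend on that specific variant. The cost of 100, the six-year life, and the zero salvage value are all hypothetical assumptions, and the schedule is not calibrated to the resale figure cited above.

```python
def straight_line_schedule(cost, life_years):
    """Equal expense every year."""
    return [cost / life_years] * life_years


def double_declining_schedule(cost, life_years):
    """Double-declining balance, switching to straight-line on the
    remaining book value once that yields the larger expense."""
    rate = 2.0 / life_years
    book_value = cost
    schedule = []
    for year in range(life_years):
        remaining_years = life_years - year
        expense = max(book_value * rate, book_value / remaining_years)
        schedule.append(expense)
        book_value -= expense
    return schedule


cost = 100.0  # hypothetical purchase price, so outputs read as percentages
life = 6      # assumed useful life in years

sl = straight_line_schedule(cost, life)
ddb = double_declining_schedule(cost, life)

print("year  straight-line  accelerated")
for year, (a, b) in enumerate(zip(sl, ddb), start=1):
    print(f"{year:>4}  {a:>13.1f}  {b:>11.1f}")
```

Both schedules expense the same total over six years. The accelerated one simply front-loads it, so the book value falls fastest in the years when a newer chip generation is most likely to arrive.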

But I also want to note that a new Nvidia GPU doesn’t make its predecessors immediately useless, since older processors can be repurposed for less demanding AI inference and other computing tasks or resold into emerging markets. And software improvements can extend their useful life. Bernstein analyst Stacy Rasgon noted that even five-year-old Nvidia A100 GPUs can still generate “comfortable” profit margins, adding that industry sources report A100 capacity at GPU cloud providers is nearly fully booked. CoreWeave’s (CRWV) management also said last month that demand for older GPUs remains strong, noting it was able to renew an expiring contract for H100 GPUs, a three-year-old chip, at within 5% of the previous contract price. That was particularly interesting given that some market analyses have shown declines in on-demand H100 rental prices across the broader cloud GPU market. CoreWeave’s management attributed the strong H100 demand to mature software libraries and engineers’ deep familiarity with the chip.

With that, the available data suggest that a five- to six-year depreciation lifespan for AI GPUs is reasonable. And here’s what Nvidia itself said on the matter in a private memo released a few weeks ago: “NVIDIA’s customers depreciate GPUs over 4-6 years based on real-world longevity and utilization patterns. Older GPUs such as A100s (released in 2020) continue to run at high utilization and generate strong contribution margins, retaining meaningful economic value well beyond the 2-3 years claimed by some commentators.”

Why Does the Speed of AI Chip Depreciation Matter for Nvidia Stock?

The speed at which Nvidia’s AI chips depreciate matters more for NVDA stock than many investors might assume. This is unlike the concerns over Nvidia’s Days Sales Outstanding, which were easily debunked in one of my recent NVDA articles. As mentioned earlier, if the actual useful life of AI GPUs is much shorter than what hyperscalers are currently assuming (say, just two to three years, as Burry suggests), these companies would eventually need to recognize the difference as an impairment charge, delivering a significant blow to their earnings.
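A rough way to size that risk is to ask how much book value would still be sitting on the balance sheet when a chip stops being useful. The sketch below is a deliberately simplified illustration: it assumes straight-line depreciation, zero salvage value, a hypothetical cost of 100, and that the entire remaining book value is written off at once. Real-world accounting for a change in useful life is more nuanced than this.

```python
def book_value_straight_line(cost, life_years, years_elapsed):
    """Remaining book value under straight-line depreciation (no salvage)."""
    depreciated = cost * min(years_elapsed, life_years) / life_years
    return cost - depreciated


def stranded_book_value(cost, assumed_life, actual_life):
    """Book value left over if the asset is retired after `actual_life`
    years but was depreciated over `assumed_life` years."""
    return book_value_straight_line(cost, assumed_life, actual_life)


cost = 100.0  # hypothetical purchase price, so outputs read as percentages

for actual_life in (2, 3, 6):
    leftover = stranded_book_value(cost, assumed_life=6, actual_life=actual_life)
    print(f"retired after {actual_life} years: {leftover:.1f}% of cost not yet expensed")
```

In this simplified setup, a chip depreciated over six years but retired after three would leave half its cost still unexpensed, which is the kind of gap the bearish argument is pointing at.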

This could result in reduced purchasing power down the road or hesitation to buy the next generation of chips at today’s pace, which would directly impact Nvidia’s revenue and growth. But I don’t see that scenario as likely, given the data we have today. The next key input will come with the launch of Nvidia’s next-generation Rubin GPU, slated for the second half of 2026.

What Do Analysts Expect for NVDA Stock?

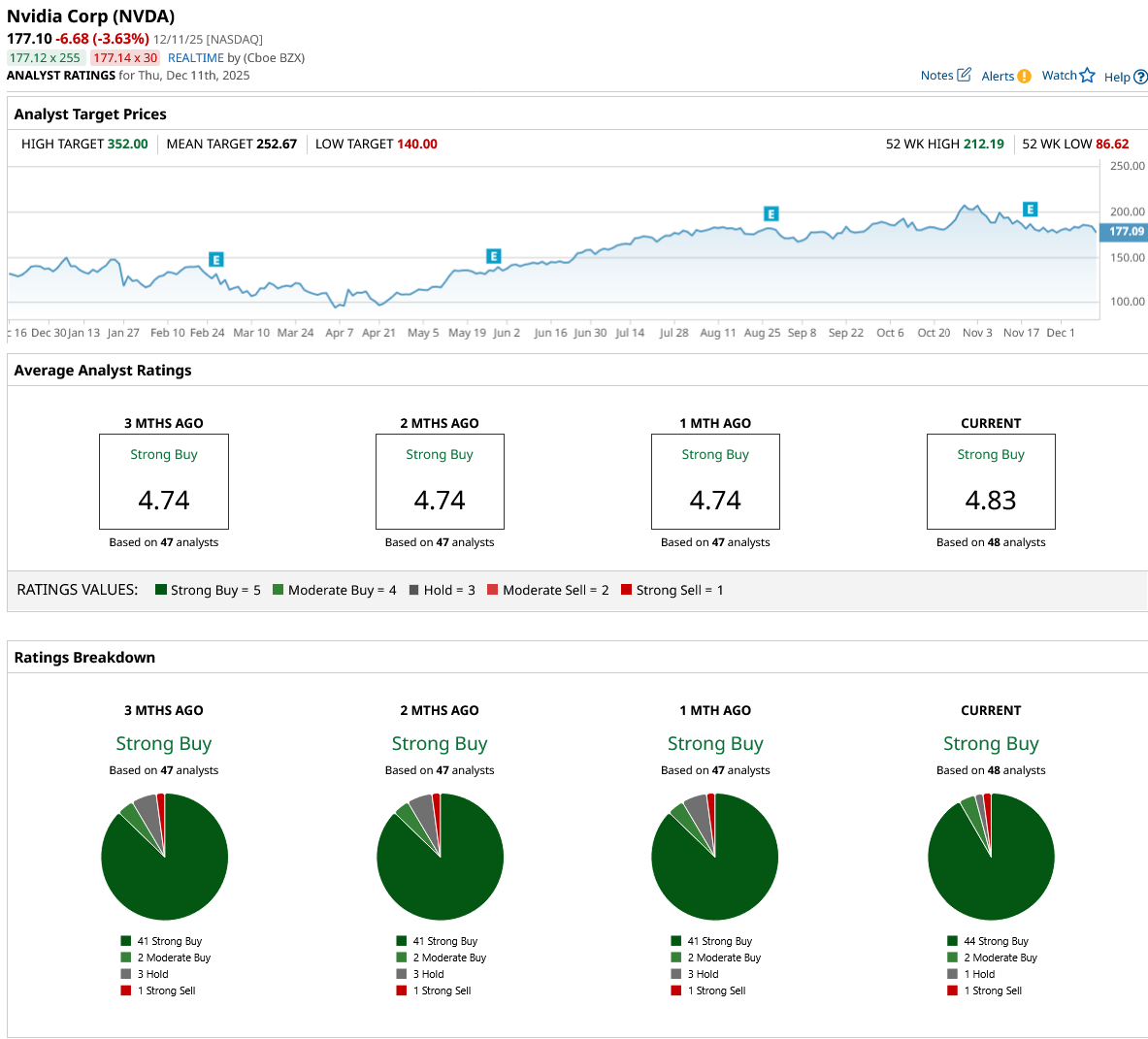

Nvidia’s stock has a top-tier consensus rating of “Strong Buy.” Of the 48 analysts covering the stock, 44 assign a “Strong Buy” rating, two rate it a “Moderate Buy,” one recommends holding, and one issues a “Strong Sell” rating. The mean price target for NVDA stock stands at $252.67, indicating a potential upside of 43% from current levels.

On the date of publication, Oleksandr Pylypenko had a position in: NVDA. All information and data in this article is solely for informational purposes. For more information, please view the Barchart Disclosure Policy.
