Modular AI, a startup founded by veterans of Apple and Google, announced a $250 million funding round on Wednesday, September 24, 2025, and has been explicit about its purpose: to break (NASDAQ: NVDA) Nvidia's dominance of the artificial intelligence (AI) chip market. The raise signals a growing belief among investors that the next frontier of AI competition lies not only in hardware but also, critically, in the software layer that orchestrates it.

The round brings Modular AI's total capital raised to $380 million and values the company at $1.6 billion, nearly triple its valuation two years earlier. It was led by Thomas Tull's US Innovative Technology fund, with continued support from existing investors including (NASDAQ: GOOG) Alphabet's venture arm GV (Google Ventures), General Catalyst, and Greylock. The backing reflects growing confidence in Modular's plan to build a hardware-agnostic AI software ecosystem. The potential implications include a shift in where AI investors focus, closer scrutiny of Nvidia's long-standing software moat, and new openings for rival chipmakers and for AI developers seeking alternatives.

Modular AI's $250 Million War Chest: A Direct Challenge to an AI Giant

The round marks a pivotal moment in the escalating contest for control of the AI computing stack. Alongside lead investor Thomas Tull's US Innovative Technology fund, DFJ Growth made a significant contribution, and existing backers (NASDAQ: GOOG) Alphabet's GV (Google Ventures), General Catalyst, and Greylock returned. The resulting $1.6 billion valuation, a sharp increase from two years earlier, signals strong investor confidence in Modular's disruptive potential.

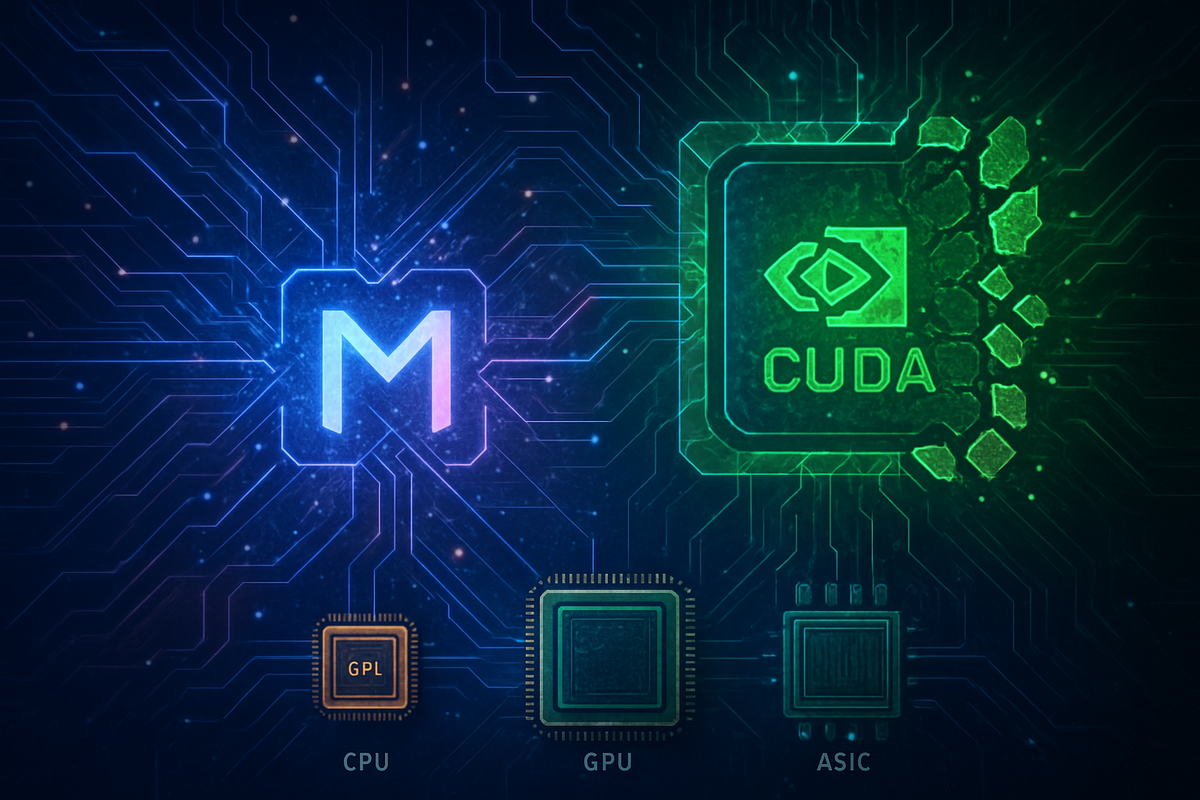

The core of Modular's strategy is to confront Nvidia's (NASDAQ: NVDA) entrenched position: the company accounts for over 80% of the high-end AI chip market, largely because of its proprietary CUDA software ecosystem. CUDA has been a major barrier to entry for competitors because code written for it runs natively only on Nvidia GPUs, which effectively locks millions of developers into Nvidia's hardware. Modular aims to break this lock-in with what it calls a "neutral software layer" or "AI hypervisor." The platform is designed to let developers run the same AI applications across chips from (NASDAQ: NVDA) Nvidia, (NASDAQ: AMD) AMD, (NASDAQ: AAPL) Apple, and others, without extensive code rewriting for each hardware architecture.

Chris Lattner, Modular's CEO and co-founder and a veteran engineer of (NASDAQ: AAPL) Apple and (NASDAQ: GOOG) Google, says the objective is not to "push down Nvidia" but to "enable a level playing field" for other competitors in AI hardware. The platform combines the MAX framework with the Mojo programming language, which the company promotes as significantly faster than Python and designed to pair Python's ease of use with C-level performance. Modular says it has already delivered state-of-the-art performance on both Nvidia and AMD hardware from a single, unified stack, with support for Nvidia GPUs ranging from the A100 and L40S to the H100, H200, and B200, as well as Grace Superchips. That breadth of compatibility is what makes Modular both a threat and an opportunity for the existing AI hardware order.

Early reaction has mixed anticipation with caution. Investors are keen to back ventures that promise to democratize AI and reduce reliance on a single vendor, but dislodging an ecosystem as deeply integrated as CUDA is an immense challenge. Still, the size of the round and the caliber of Modular's engineering team mark it as a serious contender and invite a closer look at competitive dynamics in the AI chip and software sectors. The September 24, 2025 announcement makes Modular a key player to watch as AI infrastructure evolves.

Shifting Sands: Companies That Stand to Win or Lose

Modular AI's push for a hardware-agnostic software layer creates potential winners and losers across the AI chip and cloud computing landscapes. Its "Switzerland" strategy, positioning itself as a neutral party that frees AI development from vendor lock-in, could recalibrate market positions and strategic priorities for several public companies.

Foremost among potential beneficiaries is (NASDAQ: AMD) Advanced Micro Devices, Nvidia's primary competitor in the GPU market. AMD has been building its Instinct GPU line and ROCm software platform to challenge (NASDAQ: NVDA) Nvidia's CUDA. By simplifying GPU programming across different hardware, Modular's platform could accelerate adoption of AMD's AI accelerators, lifting its market share and potentially its stock. (NASDAQ: INTC) Intel, long the dominant CPU maker and now pushing its Gaudi accelerators and integrated AI engines, also stands to gain: a truly hardware-agnostic layer would lower the cost for developers of trying Intel's AI offerings, helping it carve out a larger presence in the AI chip arena. Intel's recent partnership with (NASDAQ: NVDA) Nvidia to co-develop data-center and PC products also suggests a more collaborative, open stance that loosely fits the spirit of hardware agnosticism.

The major cloud providers, (NASDAQ: GOOGL) Alphabet (Google), (NASDAQ: AMZN) Amazon, and (NASDAQ: MSFT) Microsoft, are also poised to benefit. Each has invested heavily in custom AI chips: Google in its Tensor Processing Units (TPUs), Amazon Web Services (AWS) in Trainium and Inferentia, and Microsoft in its Azure Maia AI Accelerator and Azure Cobalt CPU. Modular's platform could make it easier for developers to deploy AI models on these proprietary chips, strengthening their appeal as cost-effective, high-performance alternatives to merchant GPUs. That would bolster the hyperscalers' position in cloud AI infrastructure, reduce their reliance on third-party hardware, and lower their operating costs. Google's continued investment in TPUs, Amazon's in Anthropic (a major Trainium customer), and Microsoft's in its own chip infrastructure all underscore their commitment to owning the full AI stack and diversifying their hardware.

On the other side, although Modular says its goal is not to "push down Nvidia" but to "enable a level playing field," (NASDAQ: NVDA) Nvidia is the company whose dominance is most exposed. Its market position rests not just on cutting-edge hardware but on CUDA, which has created powerful developer lock-in. If Modular's hardware-agnostic layer achieves widespread adoption, it could narrow CUDA's moat by letting AI workloads run efficiently on non-Nvidia hardware. Greater software portability would make it easier for rival chipmakers to compete, which could fragment the AI chip market and erode Nvidia's market share and pricing power. Nvidia's stock has grown enormously during the AI boom, so any perceived threat to its software advantage could introduce investor uncertainty, moderate its growth trajectory, and prompt strategic adaptations such as further diversification or deeper alliances, as its investment in Intel illustrates. Complicating the picture, Modular counts Nvidia among its chipmaker customers, and Nvidia has expressed support for Modular's efforts, suggesting a relationship that mixes competition with collaboration.

Wider Significance and Industry Ripple Effects: A Paradigm Shift in AI

Modular AI's funding and its explicit challenge to (NASDAQ: NVDA) Nvidia's AI chip hegemony are more than another startup success story; together they reflect a fundamental shift in the broader AI industry. The round illustrates several key trends, particularly the accelerating move toward hardware diversification and the spread of custom silicon. For years, Nvidia's vertically integrated stack, built around powerful GPUs and the proprietary CUDA ecosystem, offered unmatched performance but also created significant vendor lock-in. Modular's "AI hypervisor" approach confronts this directly by making AI software portable across diverse hardware architectures, decoupling the software from any one chip.

This decoupling fits the growing trend among hyperscale cloud providers such as (NASDAQ: GOOGL) Google, (NASDAQ: AMZN) Amazon, and (NASDAQ: MSFT) Microsoft, as well as other major AI players, to design their own AI chips. Their motivation is clear: reduce reliance on a single vendor, optimize performance and power efficiency for specific AI workloads, and avoid the "Nvidia tax" that comes with high GPU margins. By running across these varied chips, Modular's platform could further validate and accelerate the custom silicon movement, so that innovation is not constrained by a single hardware provider. The slowing of traditional silicon scaling has also pushed the industry toward modular chiplet designs and advanced packaging; because these produce a growing variety of hardware configurations, software that works across all of them becomes increasingly valuable.

The ripple effects across the AI ecosystem could be significant. For (NASDAQ: NVDA) Nvidia, even though Modular supports its hardware and counts it as a customer, competition from a truly open ecosystem would increase pressure on its GPU business. That could push Nvidia to innovate further on hardware performance, diversify its offerings, and possibly reconsider parts of its proprietary software strategy to maintain its edge. For other chipmakers such as (NASDAQ: AMD) AMD and (NASDAQ: INTC) Intel, Modular offers a substantial opportunity to gain market share by neutralizing CUDA lock-in and making their AI accelerators more attractive. Cloud providers, including existing Modular customers (NYSE: ORCL) Oracle and (NASDAQ: AMZN) Amazon, stand to offer their AI clients more flexibility and potentially lower costs, making the cloud AI market more competitive. Ultimately, the biggest beneficiaries may be AI developers: freed from vendor lock-in, they could run workloads on the best available hardware without extensive code rewriting, speeding development and democratizing AI.

From a regulatory standpoint, Modular AI's push for a "level playing field" may be welcomed by regulators concerned about market concentration and antitrust issues surrounding Nvidia's dominance. It could also strengthen calls for open standards and greater interoperability, which proponents argue benefit security, transparency, and data privacy. The concept of "sovereign AI," in which nations develop independent AI capabilities, also aligns with Modular's hardware-agnostic platform, since it can run on domestically produced chips. Historically, this challenge to an entrenched giant echoes VMware, whose virtualization software broke the tie between server workloads and specific hardware, as well as the antitrust concerns surrounding Microsoft's market dominance in the 1990s. At its most ambitious, the comparison extends to the birth of the personal computer, when new approaches fundamentally reshaped established industries. Either way, the episode highlights that innovation often thrives at the intersection of software and hardware, pushing the industry toward a more resilient, multi-vendor future.

What Comes Next: Navigating the Evolving AI Landscape

Modular AI's funding and its stated intent to disrupt (NASDAQ: NVDA) Nvidia's dominance herald a period of intense innovation and strategic repositioning across the AI chip and software markets. In the short term, Modular's "AI hypervisor" could intensify competition in the AI software layer, giving developers and enterprises more choice and flexibility in selecting AI hardware. Over time, that could pressure Nvidia's pricing power and accelerate adoption of software that supports diverse hardware, a shift already visible in cloud providers such as (NYSE: ORCL) Oracle and (NASDAQ: AMZN) Amazon using Modular's platform. The near-term focus will be on delivering measurable performance gains and cost reductions; Modular's claims of up to 70% lower latency and up to 80% cost savings form its immediate value proposition.

Looking further ahead, the long-term possibilities point towards a genuinely multi-vendor AI hardware ecosystem. Modular AI's success could erode Nvidia's market share, particularly in the burgeoning AI inference sector, leading to a decentralization of AI compute. This vision, where Modular becomes the "VMware for the AI era," could make AI more accessible and affordable, fostering broader adoption. The market will likely see continued emphasis on energy efficiency, advanced interconnectivity, and specialized silicon tailored for specific AI workloads. Modular's planned expansion from AI inference into the more compute-intensive AI training market further signals a comprehensive challenge across the entire AI lifecycle.

Key players will likely be forced into strategic pivots and adaptations. (NASDAQ: NVDA) Nvidia, still the dominant force, is broadening its strategy beyond hardware with a holistic "AI Factories" approach that ties together its software ecosystem and networking solutions and extends into new domains such as sovereign AI and robotics. Its recent $5 billion investment in (NASDAQ: INTC) Intel, paired with a collaboration under which Intel will build custom x86 data-center CPUs linked to Nvidia platforms via NVLink and x86 PC SoCs that integrate Nvidia RTX GPU chiplets, appears to signal a partnership focused on AI inference and edge computing rather than head-to-head competition in large-scale AI training. Intel, meanwhile, is pivoting toward a foundry business model, aiming to produce "every AI chip in the industry," while (NASDAQ: AMD) AMD continues its aggressive push with its Instinct GPUs and its expanding open-source ROCm software ecosystem. Hyperscalers such as (NASDAQ: GOOGL) Google, (NASDAQ: AMZN) Amazon, and (NASDAQ: MSFT) Microsoft will keep investing in custom AI silicon to reduce reliance on external vendors, control costs, and diversify their supply chains, potentially using platforms like Modular's to optimize across their varied hardware offerings.

New market opportunities are emerging rapidly, including the demand for unified AI compute layers, specialized AI inference solutions, and edge AI capabilities. The need for energy-efficient chips, custom ASICs, and advanced packaging technologies will also drive innovation. However, significant challenges remain, notably Nvidia's deeply entrenched CUDA ecosystem, the high development costs of advanced AI chips, a persistent talent shortage, and geopolitical factors impacting supply chains. Potential scenarios range from a fragmented hardware market with unified software (where Modular thrives) to Nvidia's ecosystem dominance enduring through strategic adaptation, or even hyperscalers fully vertically integrating their AI stacks. The ultimate outcome will depend on Modular's ability to drive widespread adoption, the responsiveness of established players, and the evolving demands of the global AI landscape.

A Comprehensive Wrap-up: Modular AI's Market-Reshaping Ambition

Modular AI's $250 million funding round is more than a financial transaction; it may mark a watershed moment in the artificial intelligence market. The round lifts Modular's valuation to $1.6 billion and its total funding to $380 million, reflecting growing market conviction in a future where AI development is hardware-agnostic and software-defined. It also lends weight to the case for a unified AI compute layer, often likened to a "VMware for the AI era," that challenges the long-standing model in which AI innovation is tightly coupled to specific hardware architectures, particularly (NASDAQ: NVDA) Nvidia's GPUs and CUDA software.

The key takeaway is Modular's ambitious goal of democratizing AI development with a "neutral software layer" that lets AI applications run across chips of every kind (CPUs, GPUs, ASICs, and custom silicon) without extensive code rewriting. Built on the MAX framework and the high-performance Mojo programming language, the approach promises significant latency reductions and cost savings, which the company says it has already demonstrated with major cloud providers such as (NYSE: ORCL) Oracle and (NASDAQ: AMZN) Amazon. By expanding from AI inference into the more capital-intensive AI training market, Modular AI is positioning itself to challenge Nvidia across the entire AI lifecycle and to foster a truly multi-vendor hardware future.

Moving forward, the market can expect intensified competition in AI infrastructure, with greater emphasis on modularity, flexibility, and open standards. If it succeeds, Modular AI could fundamentally change how AI models are developed, deployed, and scaled, accelerating innovation, reducing costs, and improving efficiency across the board. The shift would likely spur other companies to build similar hardware-abstraction solutions, further decentralizing AI compute and making the AI supply chain more resilient and diversified.

For investors, the coming months will be crucial. Monitor the adoption rate of Modular AI's Mojo programming language within the developer community, since widespread usage is key to establishing its software ecosystem. Watch (NASDAQ: NVDA) Nvidia's strategic responses, including any shifts in CUDA licensing or new open-source initiatives, as it adapts to this new competitive landscape. Keep a close eye on Modular AI's expansion into the AI training market, looking for concrete product developments, performance benchmarks, and significant customer wins. Finally, gauge how broadly the market accepts modular, hardware-agnostic AI solutions; a significant shift in market share away from single-vendor, hardware-locked ecosystems would be a strong indicator of Modular AI's lasting impact on the financial markets and the future of artificial intelligence.

This content is intended for informational purposes only and is not financial advice.