SambaNova Launches the World’s Fastest AI Platform

SambaNova Cloud runs Llama 3.1 405B at 132 tokens per second at full precision, available to developers today


SambaNova Systems CEO Rodrigo Liang says:

- “Enterprise customers want versatility – 70B at lightning speeds for agentic AI systems, and the highest fidelity 405B model for when they need the best results. SambaNova Cloud is the only platform that offers both today.”

- “Only SambaNova is running 405B – the best open-source model created – at full precision and at 132 tokens per second.”

Today, SambaNova Systems, provider of the fastest and most efficient chips and AI models, announced SambaNova Cloud, the world’s fastest AI inference service enabled by the speed of its SN40L AI chip. Developers can log on for free via an API today — no waiting list — and create their own generative AI applications using both the largest and most capable model, Llama 3.1 405B, and the lightning-fast Llama 3.1 70B. SambaNova Cloud runs Llama 3.1 70B at 461 tokens per second (t/s) and 405B at 132 t/s at full precision.


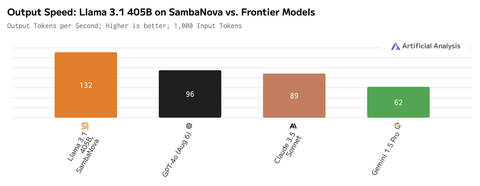

(Graphic: Artificial Analysis)

“SambaNova Cloud is the fastest API service for developers. We deliver world record speed and in full 16-bit precision – all enabled by the world’s fastest AI chip,” said Rodrigo Liang, CEO of SambaNova Systems. “SambaNova Cloud is bringing the most accurate open source models to the vast developer community at speeds they have never experienced before.”

This year, Meta launched Llama 3.1 in three sizes: 8B, 70B, and 405B. The 405B model is the crown jewel for developers, offering a highly competitive alternative to the best closed-source models from OpenAI, Anthropic, and Google. According to Meta, the Llama 3.1 models are the most popular open-source models, and Llama 3.1 405B is the most intelligent of them, offering flexibility in how the model can be used and deployed.

The Highest-Fidelity Model: SambaNova Runs 405B at 132 t/s

“Competitors are not offering the 405B model to developers today because of their inefficient chips. Providers running on Nvidia GPUs are reducing the precision of this model, hurting its accuracy, and running it at unusably slow speeds,” continued Liang. “Only SambaNova is running 405B — the best open-source model created — at full precision and at 132 tokens per second.”

Llama 3.1 405B is an extremely large model: the largest frontier open-weights model released to date. Its size makes it costly and complex to deploy, and it is served more slowly than smaller models. Compared with Nvidia H100s, SambaNova's SN40L chips reduce this cost and complexity and lessen the speed trade-off by serving the model at higher speeds.

"Agentic workflows are delivering excellent results for many applications. Because they need to process a large number of tokens to generate the final result, fast token generation is critical. The best open weights model today is Llama 3.1 405B, and SambaNova is the only provider running this model at 16-bit precision and at over 100 tokens/second. This impressive technical achievement opens up exciting capabilities for developers building using LLMs," stated Dr. Andrew Ng, Founder of DeepLearning.AI, Managing General Partner at AI Fund, and an Adjunct Professor at Stanford University’s Computer Science Department.

Independent Benchmarks Rank SambaNova Cloud as the Fastest AI Inference Platform

"Artificial Analysis has independently benchmarked SambaNova as achieving record speeds of 132 output tokens per second on their Llama 3.1 405B cloud API endpoint. This is the fastest output speed available for this level of intelligence across all endpoints tracked by Artificial Analysis, exceeding the speed of the frontier models offered by OpenAI, Anthropic, and Google. SambaNova’s Llama 3.1 endpoints will support speed-dependent AI use-cases, including for applications that require real-time responses or leverage agentic approaches to using language models,” said George Cameron, Co-Founder at Artificial Analysis.

The First Platform for Agentic AI: SambaNova Runs Llama 3.1 70B at 461 t/s

Llama 3.1 70B is considered the highest-fidelity model for agentic AI use cases, which require high speeds and low latency. Its size makes it practical to fine-tune into expert models that can be combined in multi-agent systems to solve complex tasks.

SambaNova Cloud allows developers to run Llama 3.1 70B at 461 t/s and build agentic applications that run at unparalleled speed.

“As a leading proponent of interactive AI-powered Sales Enablement SaaS solutions, Bigtincan is excited to partner with SambaNova. With SambaNova's impressive performance, we can achieve up to 300% increased efficiency in Bigtincan SearchAI, enabling us to run the most powerful open-source models like Llama in all its configurations and agentic AI workflows with unparalleled speed and effectiveness,” said David Keane, CEO of Bigtincan Solutions, an ASX-listed SaaS company.

"As the leading platform building autonomous coding agents, Blackbox AI is excited to collaborate with SambaNova. By integrating SambaNova Cloud, we're taking our platform to the next level, enabling millions of developers using Blackbox AI today to build products at unprecedented speeds—further solidifying our position as the go-to platform for developers worldwide,” stated Robert Rizk, CEO of Blackbox AI.

"As AI shifts from impressive demos to real-world business needs, cost and performance are front and center," said Alex Ratner, CEO and co-founder of Snorkel AI. "SambaNova Cloud will make it easier and faster for developers to build with Llama's impressive 405B model. SambaNova's affordable, high speed inference, combined with Snorkel's programmatic data-centric AI development is a fantastic model for creating AI success."

SambaNova’s Fast API has seen rapid adoption since its launch in early July. With SambaNova Cloud, developers can bring their own checkpoints, switch quickly between Llama models, automate workflows using a chain of AI prompts, and run existing fine-tuned models with fast inference. SambaNova expects it to quickly become the go-to inference solution for developers who demand the power of 405B, total flexibility, and speed.

SambaNova Cloud is offered in three tiers: Free, Developer, and Enterprise.

- The Free Tier (available today): offers free API access to anyone who logs in

- The Developer Tier (available by end of 2024): enables developers to build with Llama 3.1 8B, 70B, and 405B models at higher rate limits

- The Enterprise Tier (available today): provides enterprise customers with the ability to scale with higher rate limits to power production workloads

SambaNova Cloud’s impressive performance is made possible by the SambaNova SN40L AI chip. With its unique, patented dataflow design and three-tier memory architecture, the SN40L can power AI models faster and more efficiently.

About SambaNova Systems

Customers turn to SambaNova to quickly deploy state-of-the-art generative AI capabilities within the enterprise. Our purpose-built enterprise-scale AI platform is the technology backbone for the next generation of AI computing.

Headquartered in Palo Alto, California, SambaNova Systems was founded in 2017 by industry luminaries, and hardware and software design experts from Sun/Oracle and Stanford University. Investors include SoftBank Vision Fund 2, funds and accounts managed by BlackRock, Intel Capital, GV, Walden International, Temasek, GIC, Redline Capital, Atlantic Bridge Ventures, Celesta, and several others. Visit us at sambanova.ai or contact us at info@sambanova.ai. Follow SambaNova Systems on LinkedIn and on X.

View source version on businesswire.com: https://www.businesswire.com/news/home/20240910319006/en/

Contacts

Press Contact:

Virginia Jamieson

Head of External Communications, SambaNova Systems

virginia.jamieson@sambanova.ai

650-279-8619
