Privacy policies are no longer just fine print. In an age of data-driven decisions, constant cookie banners, and heightened public concern over data misuse, how companies communicate about privacy matters more than ever. But what makes a privacy policy trustworthy, and what do users actually care about when they see one?

Ziqi Zhong, a PhD candidate in Marketing at the London School of Economics (LSE), is answering these questions through a project that combines economic modeling, behavioral science, and artificial intelligence. His research explores how businesses can redesign privacy communication not just for compliance but to build trust, retain customers, and reduce environmental impact.

"There’s growing recognition that privacy isn’t just a legal obligation, it’s a user experience," Zhong explains. "And how users emotionally respond to privacy policies has real business consequences."

The initiative began with a formal economic model: What happens to customer behavior and firm profit if a company adopts a more sustainable, user-respecting data strategy? Zhong's simulations showed that when companies collect less data, explain their policies clearly, and give users control, they don't just meet legal standards—they gain deeper trust and longer-term loyalty.

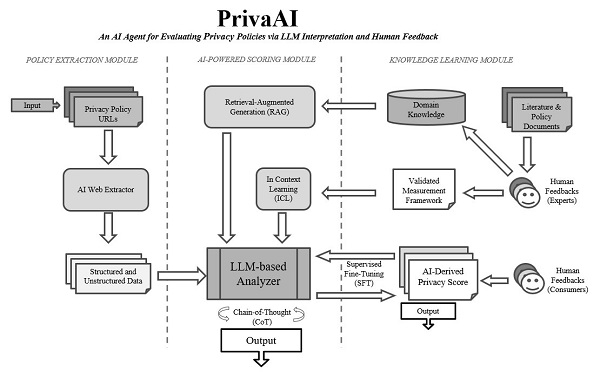

Image: System architecture of PrivaAI

Building on that, Zhong created PrivaAI, a tool that evaluates how consumers perceive privacy policies across eight psychological and ethical dimensions: transparency, control, readability, fairness, value exchange, tone, legal framing, and sustainability. Using a fine-tuned large language model (LLM), PrivaAI processes privacy policies and outputs a user-facing scorecard. These scores are derived not from compliance checklists, but from real-world behavioral data.
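As a rough illustration of what such a scorecard could look like as a data structure, the sketch below holds one score for each of the eight dimensions named above and summarizes them. The `Scorecard` class, the 0 to 100 scale, and the unweighted average are assumptions made for illustration only; the article does not describe PrivaAI's actual output format or scoring method.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict

# The eight perception dimensions named in the article.
DIMENSIONS = (
    "transparency", "control", "readability", "fairness",
    "value_exchange", "tone", "legal_framing", "sustainability",
)


@dataclass
class Scorecard:
    """Hypothetical per-policy scorecard; scores are assumed to lie in 0..100."""
    scores: Dict[str, float]

    def __post_init__(self) -> None:
        # Require exactly the eight dimensions, each within the assumed scale.
        if set(self.scores) != set(DIMENSIONS):
            raise ValueError("scores must cover exactly the eight dimensions")
        for name, value in self.scores.items():
            if not 0 <= value <= 100:
                raise ValueError(f"{name} score out of range: {value}")

    def overall(self) -> float:
        """Unweighted mean across dimensions (an illustrative choice)."""
        return mean(self.scores[d] for d in DIMENSIONS)

    def weakest(self) -> str:
        """Dimension with the lowest score, i.e. where to revise first."""
        return min(DIMENSIONS, key=lambda d: self.scores[d])


# Made-up example values, not results from the research.
example_scores = {d: 70 for d in DIMENSIONS}
example_scores["tone"] = 40
card = Scorecard(example_scores)
print(card.overall(), card.weakest())
```

A real system would presumably weight the dimensions according to the behavioral data rather than averaging them equally.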

That data came from Zhong’s lab experiments. In a series of studies, participants read variations of the same privacy policies while undergoing eye-tracking and emotion recognition through facial analysis. Some policies used vague legal language; others emphasized user choice, ethics, or sustainability. Afterward, participants rated their impressions of clarity, trustworthiness, and fairness.

"We found that specific language shifts—even a few words—can strongly impact how users feel about a company," Zhong explains. "It’s not just what you say, but how you say it."

These findings fed back into the AI model, training it to approximate how the average user might respond emotionally and cognitively to different types of privacy disclosures. Unlike most automated privacy analysis tools that focus on legality or readability alone, PrivaAI measures perception. It helps companies understand how their policies make people feel—and adjust accordingly.

But PrivaAI is more than just a policy evaluator. It is also a benchmarking engine. The platform can generate distributional reports across industries, enabling companies to compare their performance to peers and competitors. Whether in fintech, healthcare, e-commerce, or education, organizations can visualize where they rank in transparency, control, tone, and ethical design—all through the lens of user perception.
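One simple way to express "where a company ranks" against its peers is a percentile rank per dimension. The sketch below is a minimal version under stated assumptions: the function names `percentile_rank` and `benchmark`, the tie-handling rule, and the sample numbers are all hypothetical, and nothing here is taken from PrivaAI's actual benchmarking method.

```python
from bisect import bisect_left, bisect_right
from typing import Dict, List, Sequence


def percentile_rank(score: float, peer_scores: Sequence[float]) -> float:
    """Percent of peers scoring below `score`, counting ties as half."""
    if not peer_scores:
        raise ValueError("peer_scores must not be empty")
    ordered = sorted(peer_scores)
    below = bisect_left(ordered, score)            # peers strictly below
    ties = bisect_right(ordered, score) - below    # peers with the same score
    return 100.0 * (below + 0.5 * ties) / len(ordered)


def benchmark(company: Dict[str, float],
              peers: List[Dict[str, float]]) -> Dict[str, float]:
    """Percentile rank of `company` on each dimension within `peers`."""
    return {
        dim: percentile_rank(value, [p[dim] for p in peers])
        for dim, value in company.items()
    }


# Made-up peer scores for one dimension in one industry.
print(percentile_rank(70, [50, 60, 70, 80]))  # 62.5
```

Counting ties as half keeps the rank symmetric, so a company whose score equals the peer median lands at the 50th percentile.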

"PrivaAI offers not just feedback, but context," says Zhong. "You can see how users respond to your competitors’ policies and to the leaders in your industry. That turns privacy into a strategic asset."

The project has a broader ambition: contributing to sustainable data strategies. The global digital economy increasingly runs on user data, and storing and processing that data carries an environmental cost. By some widely cited estimates, the cloud now has a larger carbon footprint than the airline industry, and a single data center can consume as much electricity as 50,000 homes.

"We shouldn’t be collecting more data than we need, especially if users don’t feel comfortable sharing it in the first place," Zhong says. "A clearer, fairer privacy policy doesn’t just reduce user churn—it reduces carbon footprints."

By designing privacy communication that aligns with human psychology, Zhong hopes to influence how organizations approach data governance more broadly. His work offers a rare fusion of ethical design, consumer psychology, machine learning, and environmental thinking. PrivaAI enables firms to optimize their privacy strategies not just around what’s legal, but around what’s human and sustainable.

Zhong's research has been presented at major academic conferences, including the American Marketing Association Annual Conference (AMA), the European Marketing Academy Conference (EMAC), and the INFORMS Marketing Science Conference (ISMS). He is currently completing his PhD at LSE, where his doctoral training also spans London Business School and Imperial College London.

In recognition of his interdisciplinary contributions, Zhong has been appointed Visiting Professor at the Southwestern University of Finance and Economics (SWUFE) in China, where he is helping to develop a new curriculum in AI governance, data ethics, and responsible digital innovation.

His work, grounded in rigorous research and driven by real-world needs, presents a new framework for responsible data use in the age of AI. With PrivaAI, Zhong hopes to inspire a global shift toward privacy policies that are clear, empathetic, and environmentally aware.

More information on Zhong’s research and initiatives can be found at zzhong.io.

Media Contact

Contact Person: Ziqi Zhong

Country: China

Website: https://zzhong.io