Z.ai (formerly Zhipu) has released GLM-4.5, marking the company's entry into open-source Mixture-of-Experts (MoE) modeling with a series designed specifically for autonomous AI agents. The launch positions these models as competitive alternatives to proprietary offerings from OpenAI, Anthropic, and Google.

Two-Model Release Strategy

The GLM-4.5 family includes two variants optimized for different computational requirements:

1. GLM-4.5: The flagship model with 355 billion total parameters and 32 billion active parameters

2. GLM-4.5-Air: A more efficient variant with 106 billion total parameters and 12 billion active parameters

Both models are accessible through multiple channels, including Hugging Face, OpenRouter, Z.ai API Platform, and Z.ai Chat, ensuring broad developer access.

MoE Architecture Drives Efficiency

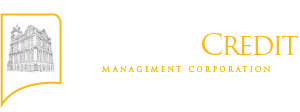

The models use a Mixture-of-Experts architecture to cut the compute needed per token. Rather than engaging all parameters at once, a router dynamically sends each token to a small subset of specialized expert sub-networks. As a result, GLM-4.5 activates only about 9% of its total parameters (32B of 355B) during inference, while GLM-4.5-Air activates about 11% (12B of 106B). The architecture delivers performance comparable to larger dense models while keeping per-token compute costs similar to those of much smaller systems.
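To make the routing idea concrete, the following is a minimal sketch of top-k expert routing in plain Python, together with the activation arithmetic quoted above. It is an illustration under stated assumptions, not Z.ai's implementation: the number of experts, the value of `k`, the router scores, and the toy expert functions are all hypothetical, and the function names (`route_top_k`, `moe_forward`, `active_fraction`) are introduced here for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of router scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the selected experts' outputs; unselected experts are never called."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


def active_fraction(active_billions, total_billions):
    """Share of parameters used per token, from the published parameter counts."""
    return active_billions / total_billions


# Hypothetical toy setup: four "experts" that just scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
scores = [0.1, 2.0, -1.0, 1.5]  # assumed router scores for one token

print(route_top_k(scores, k=2))            # which experts run, and with what weight
print(moe_forward(1.0, experts, scores, k=2))
print(f"GLM-4.5:     {active_fraction(32, 355):.1%}")
print(f"GLM-4.5-Air: {active_fraction(12, 106):.1%}")
```

Note that the published active-parameter share is not simply `k` divided by the number of experts, because components such as attention layers are used for every token regardless of routing.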

Top Performance on Key Benchmarks

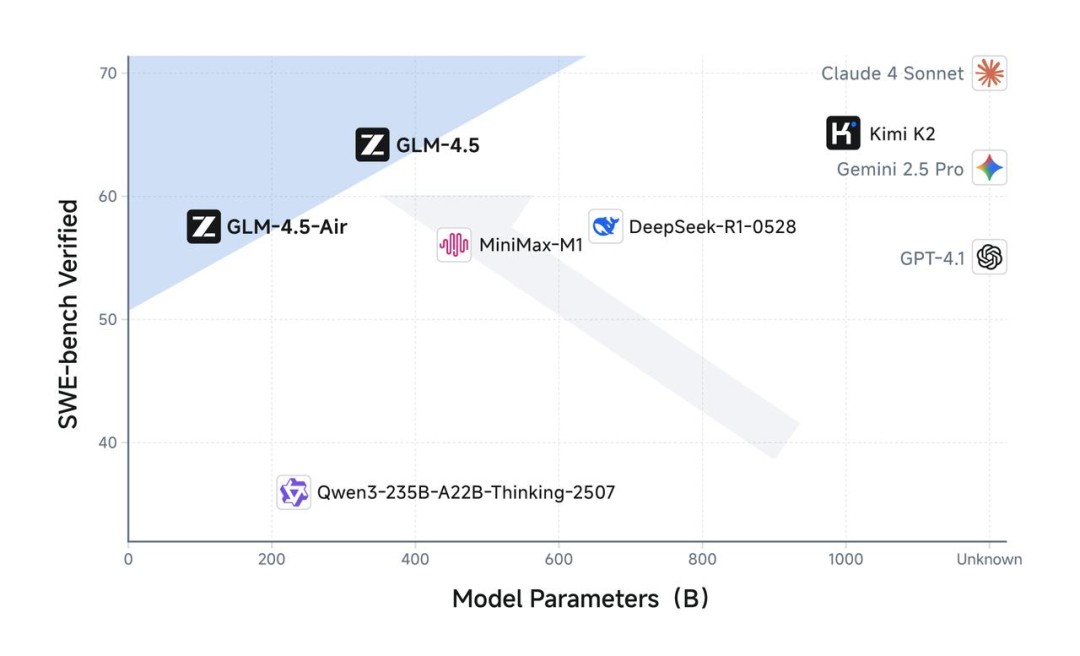

Z.ai backed its launch with a suite of benchmark results that place GLM-4.5 among the world's top-performing models—both open and closed source.

Agentic

GLM-4.5 demonstrates strong performance in autonomous agent tasks, matching Claude 4 Sonnet on TAU-Bench and BFCL v3 evaluations. Notably, the model achieved 26.4% on BrowseComp—a complex web navigation benchmark—surpassing Claude 4 Opus's 18.8% score. Tool invocation reliability reached 90.6%, exceeding both Claude (89.5%) and Kimi-K2 (86.2%).

Reasoning

The model shows exceptional analytical capabilities with 98.2% accuracy on MATH 500 problems and 91.0% on AIME 2024 mathematical competition tasks. Performance on general reasoning benchmarks includes 79.1% on GPQA and 84.6% on MMLU Pro, placing it among the top three models globally and first among open-source alternatives.

Coding

In programming evaluations, GLM-4.5 scored 64.2% on SWE-bench Verified, outperforming GPT-4.1 and approaching Kimi-K2's results. The model achieved 37.5% on Terminal-Bench, demonstrating competency in command-line environments and multi-file programming scenarios.

Open Access, Enterprise-Ready, and Affordable

Z.ai is pushing for widespread adoption through its licensing and pricing strategy. Both models are released under the Apache 2.0 license, which permits free commercial use, modification, and redistribution and gives developers maximum flexibility. The models are also globally accessible: the weights can be downloaded from Hugging Face and ModelScope for self-hosting, or the models can be called through Z.ai's global API, which is fully compatible with the Claude API endpoint and keeps integration simple.

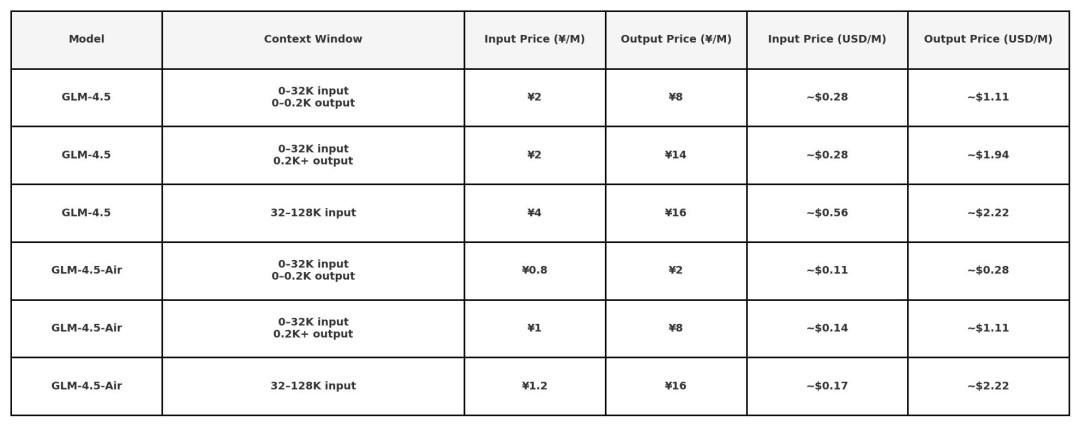

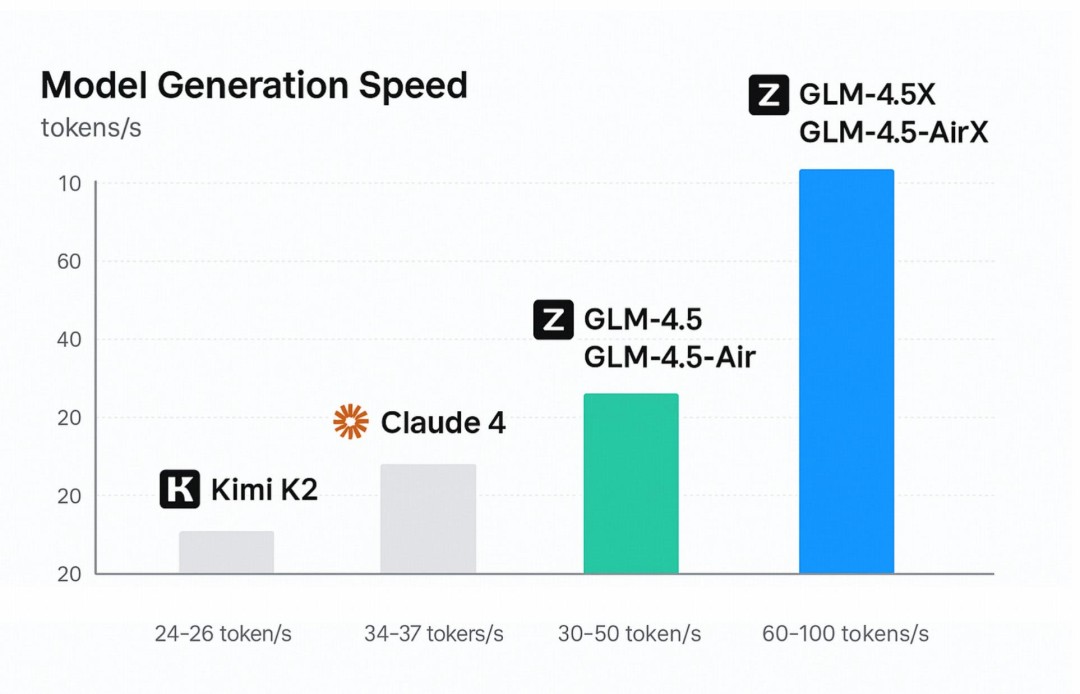

On pricing, Z.ai is setting aggressively low rates of approximately $0.11 per million input tokens and $0.28 per million output tokens, significantly undercutting major competitors. Inference is also fast: the models exceed 100 tokens per second in high-speed mode and average 40–50 tokens per second in default mode, which makes them suitable for large-scale, production-grade agentic deployments.
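As a rough guide to what these figures mean for a workload, the sketch below estimates cost and generation time from token counts. It uses only the approximate rates and speeds quoted above; the function names and the example workload (2 million input tokens, 200,000 output tokens) are hypothetical, and actual billing may differ.

```python
# Approximate rates quoted in this release (USD per million tokens).
INPUT_USD_PER_MILLION = 0.11
OUTPUT_USD_PER_MILLION = 0.28


def estimate_cost_usd(input_tokens, output_tokens,
                      input_rate=INPUT_USD_PER_MILLION,
                      output_rate=OUTPUT_USD_PER_MILLION):
    """Estimated spend for a workload, given per-million-token rates."""
    return (input_tokens / 1_000_000) * input_rate + (output_tokens / 1_000_000) * output_rate


def generation_seconds(output_tokens, tokens_per_second):
    """Time to stream the output at a steady decoding speed."""
    return output_tokens / tokens_per_second


# Hypothetical agent workload: 2M input tokens, 200k output tokens.
cost = estimate_cost_usd(2_000_000, 200_000)
print(f"Estimated cost: ${cost:.3f}")

# Compare the quoted high-speed mode (100 tok/s) with the low end of default mode (40 tok/s).
for label, speed in (("high-speed", 100), ("default", 40)):
    print(f"{label}: {generation_seconds(200_000, speed) / 60:.1f} minutes of generation")
```

Because agentic workloads tend to re-send long contexts on every step, input tokens often dominate the total, so the input rate usually matters more than the output rate in this kind of estimate.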

With this combination of strong performance, developer-friendly integration, and low-cost access, Z.ai's GLM-4.5 is positioned to become a foundation for the next generation of AI development.

The GLM-4.5 release represents Z.ai's strategic positioning in the evolving AI landscape, targeting developers and enterprises seeking greater control, transparency, and cost-effectiveness compared to closed-source alternatives. The open-source approach provides auditability and customization options while delivering performance competitive with proprietary models.

Media Contact

Company Name: Z.ai

Contact Person: Zixuan Li

Country: Singapore

Website: https://z.ai/chat